Case Study: Enhancing Defect Detection with MCP Production Line Using Computer Vision & Edge GPUs

Project Overview

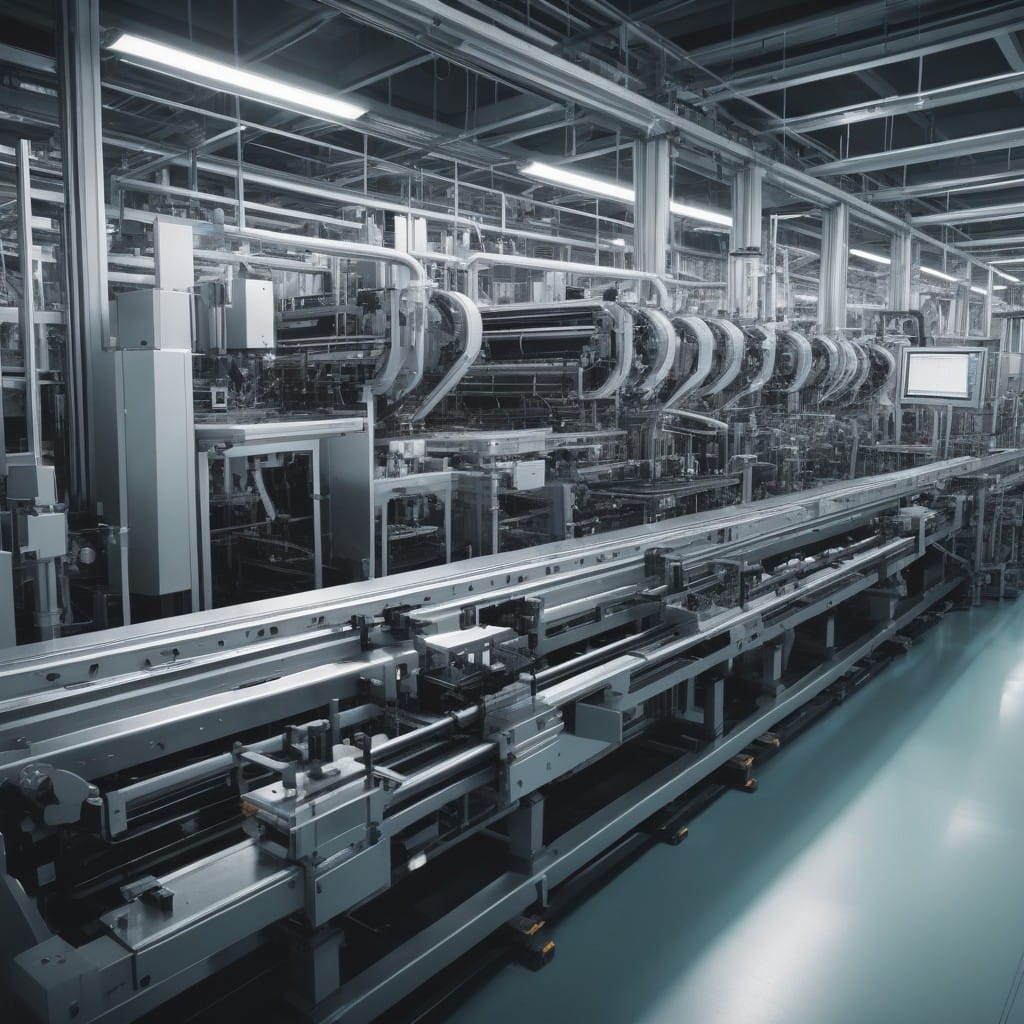

The Model Context Protocol (MCP) Production Line project aimed to improve defect detection in manufacturing by combining computer vision (CV) with protocol-managed edge GPUs. The system was designed to automate quality control, reducing human error and increasing throughput in high-speed production environments.

Using ONNX Runtime, the project deployed optimized deep learning models on edge devices to keep inference latency within the production line's real-time budget. The MCP framework standardized model deployment, versioning, and inference management, so the same workflow could be rolled out across multiple production lines.

Key objectives included:

- Automating defect detection (scratches, misalignments, deformations)

- Reducing false positives/negatives with high-precision models

- Optimizing GPU utilization on edge devices for cost efficiency

- Standardizing model deployment via protocol-managed workflows

Challenges

- Real-Time Processing Constraints

  - High-speed production lines required sub-100ms inference times to avoid bottlenecks.

  - Traditional cloud-based solutions introduced latency, making edge deployment essential.

- Model Optimization for Edge GPUs

  - Running complex CV models (e.g., YOLOv5, EfficientDet) on resource-constrained edge devices demanded quantization and pruning.

  - Balancing accuracy vs. speed was critical.

- Scalability & Version Management

  - Deploying and updating models across multiple factories required a centralized protocol to avoid inconsistencies.

  - Ensuring backward compatibility with legacy systems was a hurdle.

- Variability in Defect Types

  - Manufacturing defects varied by product type, requiring adaptive model retraining without downtime.

Solution

The project implemented a protocol-managed edge AI pipeline with the following components:

1. ONNX Runtime for Edge Inference

- Models were converted to ONNX format for cross-platform compatibility.

- The TensorRT and DirectML backends (ONNX Runtime execution providers) optimized inference for NVIDIA and AMD GPUs, respectively.

- Dynamic quantization reduced model size while maintaining >95% accuracy.
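The backend selection and quantization steps above can be sketched roughly as follows. This is a minimal illustration, not the project's code: the helper names (`pick_providers`, `load_session`, `quantize_model`), the provider preference order, and the choice of 8-bit unsigned weights are assumptions. `onnxruntime` is imported lazily so the selection logic can be read and exercised without the library installed.

```python
"""Sketch: choose an ONNX Runtime execution provider and quantize a model.

Helper names and the preference order are assumptions for illustration.
"""

# Assumed preference order: GPU backends first, CPU as the last resort.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",  # NVIDIA GPUs via TensorRT
    "CUDAExecutionProvider",      # NVIDIA GPUs via CUDA
    "DmlExecutionProvider",       # DirectML (e.g. AMD GPUs on Windows)
    "CPUExecutionProvider",       # generic fallback
]


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that this device actually offers."""
    chosen = [name for name in preferred if name in available]
    return chosen or ["CPUExecutionProvider"]


def load_session(model_path):
    """Create an inference session using the best available backend."""
    import onnxruntime as ort  # lazy import: only needed on the edge device

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


def quantize_model(fp32_path, quantized_path):
    """Apply dynamic quantization, storing weights as 8-bit unsigned integers."""
    from onnxruntime.quantization import QuantType, quantize_dynamic

    quantize_dynamic(fp32_path, quantized_path, weight_type=QuantType.QUInt8)
```

On a device that exposes only CUDA and CPU, `pick_providers` would return the CUDA provider followed by the CPU fallback. Any quantized model would still need to be re-validated against a held-out defect set before deployment, since the accuracy impact depends on the model.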

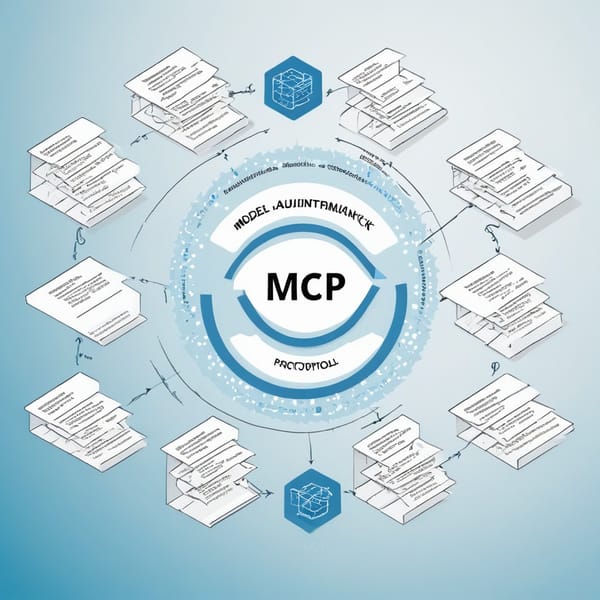

2. MCP for Model Lifecycle Management

- A centralized protocol handled model versioning, A/B testing, and rollback.

- Automated CI/CD pipelines ensured seamless updates across edge devices.
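The source does not describe the protocol's internals, so the following is only a hypothetical sketch of the versioning, A/B testing, and rollback responsibilities listed above. The `ModelRegistry` class, its method names, and the hash-based device bucketing are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    """Hypothetical per-line registry of deployed model versions."""

    history: List[str] = field(default_factory=list)  # stable versions, newest last
    candidate: Optional[str] = None                   # version under A/B test
    candidate_share: float = 0.0                      # fraction of devices on candidate

    @property
    def stable(self) -> str:
        """The version currently served to most devices."""
        return self.history[-1]

    def promote(self, version: str) -> None:
        """Make a version the new stable one and end any running A/B test."""
        self.history.append(version)
        self.candidate, self.candidate_share = None, 0.0

    def start_ab_test(self, version: str, share: float) -> None:
        """Serve a candidate version to a fraction of devices (0.0 to 1.0)."""
        if not 0.0 <= share <= 1.0:
            raise ValueError("share must be between 0.0 and 1.0")
        self.candidate, self.candidate_share = version, share

    def rollback(self) -> str:
        """Drop the current stable version and return the previous one."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.stable

    def route(self, device_id: str) -> str:
        """Deterministically assign a device to the stable or candidate model."""
        digest = hashlib.sha256(device_id.encode("utf-8")).hexdigest()
        bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
        if self.candidate and bucket < self.candidate_share * 100:
            return self.candidate
        return self.stable
```

Hashing the device ID keeps each edge device on the same variant for the duration of a test, which makes per-device comparisons easier than random assignment per request.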

3. Hybrid Cloud-Edge Architecture

- Edge devices (NVIDIA Jetson AGX, Intel NUC) handled real-time inference.

- Cloud-based retraining occurred when new defect types were detected.

4. Active Learning for Continuous Improvement

- Uncertainty sampling flagged ambiguous defects for human review.

- Retraining loops improved model robustness over time.
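One common form of uncertainty sampling scores each prediction by the entropy of its class probabilities and sends the highest-entropy samples to a reviewer. The sketch below assumes that variant; the source does not say which uncertainty measure the project used, and the function names and the 0.5 threshold are illustrative.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def flag_for_review(predictions, threshold=0.5):
    """Return IDs of images whose prediction is too uncertain.

    predictions: dict mapping image ID -> list of class probabilities.
    threshold:   assumed cut-off on normalized entropy (0 = certain, 1 = uniform).
    """
    flagged = []
    for image_id, probs in predictions.items():
        max_entropy = math.log(len(probs))  # entropy of a uniform distribution
        score = entropy(probs) / max_entropy if max_entropy > 0 else 0.0
        if score >= threshold:
            flagged.append(image_id)
    return flagged


# Example: a confident prediction passes, a near 50/50 split is flagged.
batch = {
    "img_001": [0.98, 0.02],  # clearly "no defect"
    "img_002": [0.55, 0.45],  # ambiguous, goes to human review
}
print(flag_for_review(batch))
```

Flagged images would then be labeled by an inspector and added to the retraining set.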

Tech Stack

| Category | Technologies Used |
|---|---|
| Computer Vision | YOLOv5, EfficientDet, OpenCV |
| Model Optimization | ONNX Runtime, TensorRT, Quantization (FP16/INT8) |
| Edge Hardware | NVIDIA Jetson AGX, Intel NUC, AMD Radeon GPUs |
| Protocol Management | Custom MCP framework, Kubernetes (for orchestration) |
| Cloud Integration | AWS S3 (model storage), SageMaker (retraining) |
| Monitoring | Prometheus, Grafana (performance tracking) |

Results

The MCP Production Line achieved significant improvements in defect detection and operational efficiency:

1. Performance Metrics

- Inference Speed: <50ms per image (meeting real-time requirements).

- Accuracy: 98.5% defect detection rate (up from 85% with manual inspection).

- False Positives: Reduced by 70% via active learning.
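The source reports the per-image latency but not how it was measured. A minimal sketch of one way to check a latency budget is given below, assuming a 95th-percentile criterion and a generic `infer` callable; both are assumptions, as are the function names.

```python
import math
import time


def measure_latency_ms(infer, inputs, warmup=3):
    """Time each call to infer(x) and return per-call latencies in milliseconds."""
    for x in inputs[:warmup]:  # warm-up calls are not recorded
        infer(x)
    samples = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def p95(samples):
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based rank
    return ordered[max(0, rank - 1)]


def within_budget(samples, budget_ms=50.0):
    """True if the 95th-percentile latency meets the budget."""
    return p95(samples) <= budget_ms
```

Checking a high percentile rather than the mean is a deliberate choice here: on a moving line, occasional slow frames matter more than the average.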

2. Cost & Efficiency Gains

- 30% reduction in GPU costs via optimized ONNX Runtime deployment.

- 40% faster model updates using MCP-managed pipelines.

3. Scalability & Adoption

- Deployed across 5 factories with zero downtime during updates.

- Seamless integration with existing PLC (Programmable Logic Controller) systems.

Key Takeaways

- Edge AI + Protocol Management = Scalability

  - Combining ONNX Runtime with MCP streamlined model deployment at scale.

- Real-Time Processing is Achievable on Edge GPUs

  - Proper quantization and backend optimization (TensorRT/DirectML) enabled sub-50ms inference.

- Active Learning Enhances Model Accuracy

  - Continuously retraining models on ambiguous samples reduced false positives over time.

- Standardization is Critical for Industrial AI

  - The MCP framework ensured consistent model behavior across distributed edge devices.

This project demonstrated that protocol-managed edge AI can transform manufacturing quality control, delivering faster, cheaper, and more reliable defect detection than traditional methods. Future work includes expanding to 3D vision systems and predictive maintenance integrations.
