Case Study: Model Context Protocol (MCP) ML Ops – Protocol-Managed Model Retraining with PyTorch & Feature Store

Project Overview

The Model Context Protocol (MCP) ML Ops project was designed to automate and optimize machine learning model retraining workflows using PyTorch-based tool nodes and feature store resource servers. The goal was to create a scalable, protocol-managed system that ensures models remain up-to-date with minimal manual intervention while maintaining high accuracy and efficiency.

This project addressed the growing need for continuous model improvement in dynamic environments where data distributions shift over time. By integrating a feature store for centralized data management and PyTorch-based tool nodes for distributed retraining, MCP ML Ops provided a robust framework for maintaining model performance in production.

Challenges

Before implementing MCP ML Ops, the organization faced several critical challenges:

- Manual Retraining Overhead – Models required frequent manual updates, leading to delays and inefficiencies.

- Data Consistency Issues – Feature drift and inconsistent data pipelines caused model degradation.

- Scalability Limitations – Existing retraining workflows couldn’t handle large-scale datasets efficiently.

- Lack of Version Control – Tracking model iterations and feature changes was cumbersome.

- Resource Bottlenecks – Training jobs competed for compute resources, slowing down deployments.

These issues resulted in higher operational costs, slower model iterations, and declining prediction accuracy over time.

Solution

The MCP ML Ops framework introduced a protocol-managed retraining system with the following key components:

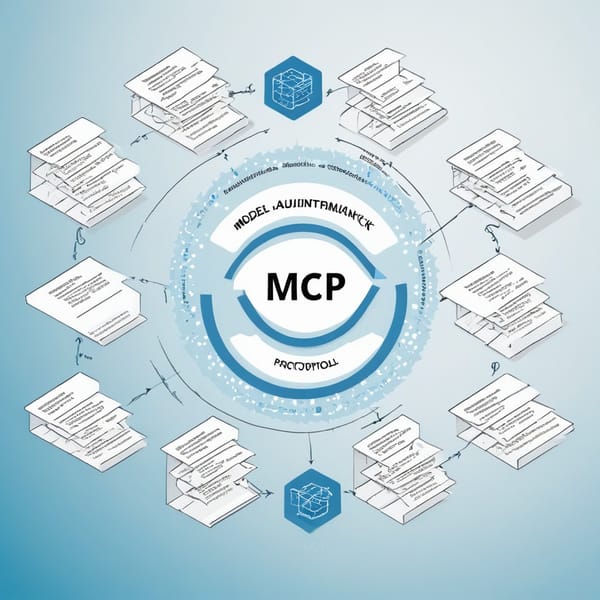

1. Protocol-Managed Retraining Workflow

- A centralized scheduler triggered retraining based on predefined conditions (e.g., data drift, time intervals).

- Automated validation checks ensured only high-quality models were promoted to production.
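The trigger-and-gate logic described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's scheduler. It assumes the inputs are binned feature histograms and uses the Population Stability Index (PSI) as the drift statistic. The 0.2 PSI threshold and 7-day interval are illustrative defaults, and the validation gate assumes a candidate is promoted only if it matches or beats the production model on held-out data. The function names `psi`, `should_retrain`, and `passes_validation` are hypothetical.

```python
import math
from datetime import datetime, timedelta

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Both inputs are lists of bin proportions over the same bins; eps guards
    against log(0) when a bin is empty.
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

def should_retrain(last_trained, now, baseline_bins, live_bins,
                   max_age=timedelta(days=7), psi_threshold=0.2):
    """Return (decision, reason); drift is checked before the time-based trigger."""
    drift = psi(baseline_bins, live_bins)
    if drift > psi_threshold:
        return True, f"drift (PSI={drift:.3f})"
    if now - last_trained >= max_age:
        return True, "scheduled interval elapsed"
    return False, "no trigger"

def passes_validation(candidate_score, production_score, min_gain=0.0):
    """Promotion gate (assumed): the candidate must match or beat production on held-out data."""
    return candidate_score >= production_score + min_gain

now = datetime(2024, 1, 10)
baseline = [0.25, 0.25, 0.25, 0.25]
print(should_retrain(datetime(2024, 1, 8), now, baseline, [0.10, 0.20, 0.30, 0.40]))
# (True, 'drift (PSI=0.228)')
```

Checking drift before elapsed time means that when both conditions fire, the reported reason is the data-driven one, which is usually more useful when auditing why a retrain ran.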

2. PyTorch Tool Nodes for Distributed Training

- Distributed training nodes ran retraining jobs in parallel across multiple GPUs/TPUs.

- Dynamic batching and gradient accumulation improved resource utilization, allowing large effective batch sizes within per-device memory limits.
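Gradient accumulation works because, for equal-sized micro-batches, the average of the micro-batch gradients equals the full-batch gradient. The pure-Python sketch below checks this on a toy one-parameter linear model with mean-squared error. In PyTorch, the same scaling is commonly done by dividing each micro-batch loss by the number of accumulation steps before `loss.backward()` and calling `optimizer.step()` once per accumulated batch. The helper names are hypothetical. `effective_batch_size` assumes plain data-parallel training where gradients are averaged across workers.

```python
import math

def mse_grad(w, xs, ys):
    """Gradient d/dw of mean((w*x - y)^2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def accumulated_grad(w, xs, ys, micro_batch_size):
    """Accumulate micro-batch gradients, each scaled by 1/accum_steps.

    This mirrors dividing each micro-batch loss by accum_steps before
    calling backward(), so the summed gradient matches the full-batch one.
    """
    if len(xs) % micro_batch_size != 0:
        raise ValueError("batch must split into equal-sized micro-batches")
    accum_steps = len(xs) // micro_batch_size
    total = 0.0
    for i in range(accum_steps):
        lo, hi = i * micro_batch_size, (i + 1) * micro_batch_size
        total += mse_grad(w, xs[lo:hi], ys[lo:hi]) / accum_steps
    return total

def effective_batch_size(micro_batch_size, accum_steps, world_size):
    """Samples contributing to one optimizer step across all data-parallel workers."""
    return micro_batch_size * accum_steps * world_size

xs = [float(i) for i in range(1, 9)]
ys = [2.0 * x + 1.0 for x in xs]
full = mse_grad(0.5, xs, ys)
accum = accumulated_grad(0.5, xs, ys, micro_batch_size=2)
print(math.isclose(full, accum))  # True
```

For example, a micro-batch of 32 with 4 accumulation steps on 8 GPUs gives `effective_batch_size(32, 4, 8) == 1024` samples per optimizer step, without any single device holding more than 32 samples at once.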

3. Feature Store Integration

- A unified feature store served as a single source of truth for training and inference data.

- Versioned feature pipelines prevented inconsistencies between training and serving environments.
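A feature store helps keep training and serving consistent in two ways. Both paths are pinned to the same feature version, and training sets are built with point-in-time lookups, so a label at time t only sees feature values recorded at or before t. The in-memory `FeatureLog` class below is a hypothetical sketch of that idea, not the Feast or Hopsworks API. For brevity, timestamps are plain integers.

```python
from bisect import bisect_right
from collections import defaultdict

class FeatureLog:
    """Hypothetical in-memory feature history keyed by (feature_version, entity_id)."""

    def __init__(self):
        self._timestamps = defaultdict(list)  # sorted event timestamps per key
        self._values = defaultdict(list)      # values aligned with _timestamps

    def write(self, version, entity_id, ts, value):
        """Insert a value, keeping each key's history sorted by timestamp."""
        key = (version, entity_id)
        idx = bisect_right(self._timestamps[key], ts)
        self._timestamps[key].insert(idx, ts)
        self._values[key].insert(idx, value)

    def as_of(self, version, entity_id, ts):
        """Training path: latest value recorded at or before ts, else None."""
        key = (version, entity_id)
        idx = bisect_right(self._timestamps.get(key, []), ts)
        return self._values[key][idx - 1] if idx else None

    def latest(self, version, entity_id):
        """Serving path: newest value for the same pinned feature version."""
        values = self._values.get((version, entity_id), [])
        return values[-1] if values else None

log = FeatureLog()
log.write("v2", "user_1", 100, 0.3)
log.write("v2", "user_1", 200, 0.7)
print(log.as_of("v2", "user_1", 150))  # 0.3
print(log.latest("v2", "user_1"))      # 0.7
```

Keying by version means a pipeline change produces a new history rather than silently rewriting the one an existing model was trained on.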

4. Continuous Monitoring & Rollback Mechanism

- Real-time performance tracking detected model degradation early.

- Automated rollback to previous stable versions if new models underperformed.
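The monitoring-plus-rollback loop can be sketched as a sliding-window check against the previous stable model's baseline score. The sketch makes three assumptions: a single scalar quality metric where higher is better, an illustrative tolerance of 0.02, and a fixed window of recent observations. `ModelRegistry` and `DegradationMonitor` are hypothetical classes, not MLflow or Prometheus APIs.

```python
from collections import deque

class ModelRegistry:
    """Hypothetical registry tracking the serving version and the last stable one."""

    def __init__(self, stable_version):
        self.serving = stable_version
        self.previous_stable = None

    def promote(self, version):
        self.previous_stable = self.serving
        self.serving = version

    def rollback(self):
        if self.previous_stable is None:
            raise RuntimeError("no stable version to roll back to")
        self.serving, self.previous_stable = self.previous_stable, None

class DegradationMonitor:
    """Flags degradation when a full window of live scores averages below baseline - tolerance."""

    def __init__(self, baseline, tolerance=0.02, window=5):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)

    def observe(self, score):
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough evidence yet
        mean = sum(self.scores) / len(self.scores)
        return mean < self.baseline - self.tolerance

registry = ModelRegistry("model-v7")
registry.promote("model-v8")
monitor = DegradationMonitor(baseline=0.90, tolerance=0.02, window=3)
for score in [0.89, 0.86, 0.85, 0.84]:
    if monitor.observe(score):
        registry.rollback()
        break
print(registry.serving)  # model-v7
```

Waiting for a full window before deciding avoids rolling back on a single noisy reading, at the cost of a few observations of delay.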

This approach reduced manual effort, improved model reliability, and accelerated deployment cycles.

Tech Stack

The MCP ML Ops system leveraged the following technologies:

| Category | Technologies Used |
|---|---|
| ML Framework | PyTorch, ONNX (for interoperability) |
| Feature Store | Feast, Hopsworks |
| Orchestration | Apache Airflow, Kubeflow Pipelines |
| Compute | Kubernetes, AWS SageMaker (for scalable training) |
| Monitoring | Prometheus, Grafana, MLflow |
| Version Control | DVC (Data Version Control), Git |
| Protocol Layer | Custom Python-based MCP scheduler |

This stack ensured scalability, reproducibility, and seamless integration with existing infrastructure.

Results

After deploying MCP ML Ops, the organization achieved significant improvements:

1. 70% Reduction in Manual Retraining Effort

- Automated workflows eliminated repetitive tasks, freeing data scientists for higher-value work.

2. 40% Faster Model Iterations

- Distributed PyTorch nodes reduced training time from days to hours, shortening end-to-end iterations, which also include validation and deployment steps.

3. Improved Model Accuracy (15% Uplift)

- Continuous retraining and feature store consistency minimized drift-related performance drops.

4. Scalable to Petabyte-Scale Datasets

- Kubernetes-managed training clusters handled large workloads without the compute contention that had previously slowed deployments.

5. Enhanced Traceability & Compliance

- Versioned features and models simplified auditing and regulatory compliance.
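Traceability of this kind usually rests on content hashing. If a model's audit record stores hashes of the exact training data and configuration, any later change to them is detectable. The sketch below builds such a record with Python's standard `hashlib` and `json` modules, in the same content-addressed spirit as DVC. The record fields and `lineage_record` are hypothetical, not the project's schema.

```python
import hashlib
import json

def content_hash(obj):
    """SHA-256 of a canonical JSON encoding: identical content yields the same ID."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def lineage_record(model_version, feature_version, training_rows, hyperparams):
    """Hypothetical audit record tying a model to the exact inputs it was trained on."""
    return {
        "model_version": model_version,
        "feature_version": feature_version,
        "data_hash": content_hash(training_rows),
        "hyperparams_hash": content_hash(hyperparams),
    }

rows = [{"user_id": 1, "f1": 0.3}, {"user_id": 2, "f1": 0.7}]
record = lineage_record("model-v8", "v2", rows, {"lr": 0.001, "epochs": 5})

# Re-deriving the record from the same inputs reproduces it exactly...
same = lineage_record("model-v8", "v2", [dict(r) for r in rows], {"epochs": 5, "lr": 0.001})
# ...while any change to the training data changes data_hash.
edited = lineage_record("model-v8", "v2", [{"user_id": 1, "f1": 0.31}, rows[1]], {"lr": 0.001, "epochs": 5})
print(record == same, record["data_hash"] == edited["data_hash"])  # True False
```

Canonical encoding (`sort_keys=True`, fixed separators) matters here, because it ensures dictionaries with the same content but a different key order hash identically.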

These outcomes translated into higher ROI on ML investments and more reliable AI-driven decisions.

Key Takeaways

The MCP ML Ops project demonstrated several critical lessons for ML engineering teams:

- Automation is Essential – Manual retraining doesn’t scale; protocol-driven workflows ensure efficiency.

- Feature Stores Prevent Training-Serving Skew – Centralized feature management maintains consistency between training and inference.

- Distributed Training Accelerates Iterations – PyTorch tool nodes enable faster experimentation.

- Monitoring is Non-Negotiable – Real-time performance tracking catches issues before they impact production.

- Version Everything – Reproducibility depends on tracking data, features, and model versions.

By adopting MCP ML Ops, organizations can future-proof their ML pipelines, ensuring models stay accurate, efficient, and scalable in ever-changing environments.

This case study highlights how protocol-managed retraining, PyTorch optimization, and feature store integration can transform ML Ops workflows. For teams struggling with model decay and operational inefficiencies, MCP offers a practical blueprint.