Case Study: Model Context Protocol (MCP) Production Line – Computer Vision Defect Detection Using Protocol-Managed Edge GPUs (ONNX Runtime Tools)

Project Overview

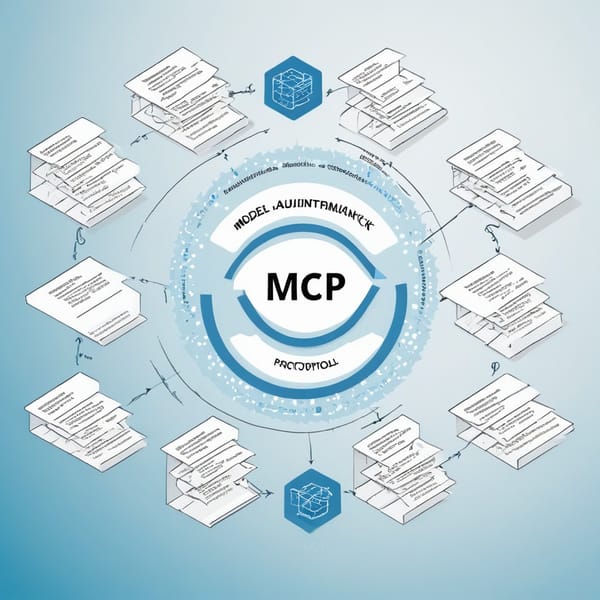

The Model Context Protocol (MCP) Production Line project aimed to implement an AI-driven computer vision system for real-time defect detection in manufacturing. The solution leveraged ONNX Runtime tools and protocol-managed edge GPUs to ensure high-speed, low-latency inference while maintaining scalability and interoperability across different hardware platforms.

The primary goal was to reduce the number of defective products leaving the production line by deploying lightweight, optimized deep learning models on edge devices, minimizing reliance on cloud-based processing. By integrating MCP, the system standardized model deployment, versioning, and inference management, ensuring seamless updates and performance tracking.

Challenges

- Real-Time Processing Constraints – Network round trips to cloud-hosted models added latency, making real-time defect detection impractical.

- Hardware Heterogeneity – Production lines used different GPU models, requiring a hardware-agnostic solution.

- Model Optimization & Portability – Deploying large neural networks on edge devices demanded model compression without sacrificing accuracy.

- Scalability & Maintenance – Managing multiple edge devices and model versions manually was error-prone and inefficient.

- Integration with Existing Systems – The solution needed to work alongside legacy manufacturing equipment without major infrastructure changes.

Solution

The project adopted a protocol-managed edge AI architecture with the following key components:

1. ONNX Runtime for Cross-Platform Inference

- Models were trained in PyTorch/TensorFlow and converted to ONNX format for hardware-agnostic deployment.

- ONNX Runtime’s execution providers optimized inference for different GPUs (NVIDIA, Intel, AMD).
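One way to put the execution providers to work on mixed hardware is to ask ONNX Runtime which providers the installed build supports and pass them to the session in priority order. The sketch below assumes an illustrative preference order (TensorRT, then CUDA, then AMD and Intel providers, then DirectML, then CPU); the helper name `choose_providers` and the model path `defect_model.onnx` are hypothetical. Only `onnxruntime.get_available_providers()` and the `providers` argument of `onnxruntime.InferenceSession` come from the ONNX Runtime Python API.

```python
from typing import List, Sequence

# Assumed priority order (illustrative): vendor-specific GPU providers first,
# the portable DirectML provider next, and the CPU provider as the last resort.
PREFERRED_PROVIDERS = (
    "TensorrtExecutionProvider",  # NVIDIA GPUs via TensorRT
    "CUDAExecutionProvider",      # NVIDIA GPUs via CUDA kernels
    "MIGraphXExecutionProvider",  # AMD GPUs
    "ROCMExecutionProvider",      # AMD GPUs
    "OpenVINOExecutionProvider",  # Intel CPUs, GPUs and accelerators
    "DmlExecutionProvider",       # DirectML on Windows (DirectX 12 GPUs)
    "CPUExecutionProvider",       # always-available fallback
)


def choose_providers(available: Sequence[str]) -> List[str]:
    """Return the preferred providers present in this ONNX Runtime build, best first."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    # Guarantee a CPU fallback so the session always has at least one provider.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime not installed; example:",
              choose_providers(["CUDAExecutionProvider", "CPUExecutionProvider"]))
    else:
        providers = choose_providers(ort.get_available_providers())
        print("Providers in priority order:", providers)
        # Hypothetical model path; uncomment once an exported model exists.
        # session = ort.InferenceSession("defect_model.onnx", providers=providers)
```

ONNX Runtime assigns each graph node to the first provider in the list that can execute it and falls back to later providers for the rest, so the same code can run unchanged on a Jetson (TensorRT/CUDA) or on a CPU-only node.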

2. Model Context Protocol (MCP) for Lifecycle Management

- Standardized model packaging (weights, metadata, versioning).

- Automated deployment & rollback via a central protocol server.

- Performance telemetry to monitor model drift and hardware utilization.
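The case study does not describe the package format itself, so the following is only a hypothetical sketch of the lifecycle ideas listed above: a manifest that carries a name, a version, and a checksum of the weights; an integrity and version check before a new model is activated; and rollback to the previously active version. `ModelManifest`, `ModelSlot`, and their fields are illustrative names, not taken from the Model Context Protocol specification or any other published standard.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ModelManifest:
    """Hypothetical package metadata shipped alongside an .onnx weights file."""
    name: str
    version: str  # semantic version, e.g. "1.4.0"
    sha256: str   # hex digest of the weights file

    def version_key(self) -> Tuple[int, ...]:
        # "1.10.0" -> (1, 10, 0), so versions compare numerically, not as strings.
        return tuple(int(part) for part in self.version.split("."))


class ModelSlot:
    """Tracks the active model on one edge device plus the previous one for rollback."""

    def __init__(self, manifest: ModelManifest):
        self.active = manifest
        self.previous: Optional[ModelManifest] = None

    def deploy(self, candidate: ModelManifest, weights: bytes) -> bool:
        """Activate `candidate` only if it is newer and its weights match the digest."""
        if candidate.name != self.active.name:
            return False
        if candidate.version_key() <= self.active.version_key():
            return False
        if hashlib.sha256(weights).hexdigest() != candidate.sha256:
            return False  # corrupted or tampered download: keep serving the old model
        self.previous, self.active = self.active, candidate
        return True

    def rollback(self) -> bool:
        """Restore the previously active model, e.g. after telemetry shows a regression."""
        if self.previous is None:
            return False
        self.active, self.previous = self.previous, None
        return True


if __name__ == "__main__":
    v1 = ModelManifest("scratch-detector", "1.0.0", hashlib.sha256(b"v1").hexdigest())
    v2 = ModelManifest("scratch-detector", "1.1.0", hashlib.sha256(b"v2").hexdigest())
    slot = ModelSlot(v1)
    print(slot.deploy(v2, b"v2"), slot.active.version)  # True 1.1.0
    print(slot.rollback(), slot.active.version)         # True 1.0.0
```

Keeping exactly one previous version per device is a deliberate simplification; a central server could keep a longer history and push any earlier manifest back down.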

3. Edge-Optimized Computer Vision Pipeline

- Quantization & Pruning – Reduced model size while maintaining >95% accuracy.

- Dynamic Batching – Improved throughput by processing multiple frames in parallel.

- Hardware-Accelerated Preprocessing – Used GPU-optimized libraries (OpenCV DNN, TensorRT) for faster image transformations.
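Quantization maps 32-bit floating-point values onto 8-bit integers, which is where both the 4x smaller weights and the small accuracy loss come from. The minimal NumPy sketch below shows asymmetric (affine) per-tensor quantization using the same formulas as the ONNX `QuantizeLinear` and `DequantizeLinear` operators; the function names and the random stand-in "weights" are illustrative. In practice a tool such as ONNX Runtime's `onnxruntime.quantization.quantize_static` derives the scales from calibration images rather than from hand-written code like this.

```python
import numpy as np


def affine_params(x: np.ndarray, qmin: int = 0, qmax: int = 255):
    """Compute a scale and zero point mapping [min(x), max(x)] onto [qmin, qmax] (uint8)."""
    # Extend the range to include 0.0 so that zero is represented exactly.
    lo = min(float(x.min()), 0.0)
    hi = max(float(x.max()), 0.0)
    scale = (hi - lo) / (qmax - qmin) if hi > lo else 1.0
    zero_point = int(np.clip(round(qmin - lo / scale), qmin, qmax))
    return scale, zero_point


def quantize(x: np.ndarray, scale: float, zero_point: int, qmin: int = 0, qmax: int = 255):
    # QuantizeLinear: saturate(round(x / scale) + zero_point), rounding half to even.
    q = np.round(x / scale) + zero_point
    return np.clip(q, qmin, qmax).astype(np.uint8)


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    # DequantizeLinear: (q - zero_point) * scale
    return (q.astype(np.float32) - zero_point) * scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    weights = rng.normal(0.0, 0.5, size=10_000).astype(np.float32)  # stand-in tensor
    scale, zp = affine_params(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    print("bytes:", weights.nbytes, "->", q.nbytes)  # bytes: 40000 -> 10000
    print("max abs error:", float(np.abs(weights - restored).max()), "bound:", scale / 2)
```

Each value is off by at most half a quantization step (`scale / 2`), which is why accuracy usually degrades only slightly; outlier-heavy tensors widen the range and therefore the step, which is one reason calibration matters.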

4. Defect Detection Workflow

- High-Speed Cameras captured product images on the assembly line.

- Edge GPUs ran ONNX-optimized models for defect classification (scratches, misalignments, etc.).

- MCP-Managed Updates ensured all devices used the latest model version.

- Real-Time Alerts triggered reject mechanisms for faulty products.
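The workflow steps can be sketched as a small loop body with a simple dynamic-batching rule: wait for one frame, then keep collecting until the batch is full or a short deadline expires, and run a single inference call on whatever arrived. This is an illustrative sketch only. `classify_batch` stands in for an ONNX Runtime session call that returns one defect probability per image, `reject` stands in for whatever PLC or actuator interface drives the reject mechanism, and the frame format, batch size, wait window, and 0.5 threshold are all hypothetical.

```python
import queue
import time
from typing import Callable, List, Sequence, Tuple

Frame = Tuple[int, bytes]  # hypothetical format: (product_id, encoded image)


def collect_batch(frames: "queue.Queue[Frame]", max_batch: int, max_wait_s: float) -> List[Frame]:
    """Block for one frame, then gather more until the batch is full or the deadline passes."""
    batch = [frames.get()]
    deadline = time.monotonic() + max_wait_s
    while len(batch) < max_batch:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(frames.get(timeout=remaining))
        except queue.Empty:
            break
    return batch


def inspect_batch(batch: Sequence[Frame],
                  classify_batch: Callable[[List[bytes]], Sequence[float]],
                  reject: Callable[[int], None],
                  threshold: float = 0.5) -> List[int]:
    """Run one batched inference call and trigger the reject mechanism for defective items."""
    scores = classify_batch([image for _, image in batch])
    rejected = []
    for (product_id, _), score in zip(batch, scores):
        if score >= threshold:
            reject(product_id)
            rejected.append(product_id)
    return rejected


if __name__ == "__main__":
    frames: "queue.Queue[Frame]" = queue.Queue()
    for pid in range(10):
        frames.put((pid, b"scratch" if pid in (3, 7) else b"ok"))

    def fake_classifier(images: List[bytes]) -> List[float]:
        # Demonstration stand-in for a real model: flags images tagged as scratched.
        return [0.9 if img == b"scratch" else 0.1 for img in images]

    rejected: List[int] = []
    while not frames.empty():
        batch = collect_batch(frames, max_batch=4, max_wait_s=0.002)
        rejected += inspect_batch(batch, fake_classifier, reject=lambda pid: print("reject", pid))
    print(rejected)  # [3, 7]
```

The deadline bounds how long any single frame waits for companions, so batching raises throughput under load without adding more than `max_wait_s` of latency when the line is quiet.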

Tech Stack

| Category | Technologies Used |
|---|---|
| AI Frameworks | PyTorch, TensorFlow |
| Model Optimization | ONNX, Quantization, Pruning |
| Inference Engine | ONNX Runtime (CUDA, DirectML, TensorRT) |
| Edge Management | Model Context Protocol (MCP), Docker, Kubernetes (for orchestration) |
| Computer Vision | OpenCV, FFmpeg (for video streaming) |
| Hardware | NVIDIA Jetson, Intel NCS, AMD EPYC Embedded |

Results

- 99.2% Defect Detection Accuracy – Reduced false positives/negatives compared to manual inspection.

- <10ms Latency per Frame – Enabled real-time processing at 120 FPS.

- 40% Reduction in Cloud Costs – Shifted 90% of inference to edge devices.

- Zero Downtime Updates – MCP allowed seamless model version switches without stopping production.

- Scalable to 100+ Edge Nodes – Centralized protocol management simplified large-scale deployments.
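The latency and frame-rate figures above (under 10 ms per frame, 120 FPS) are related by simple arithmetic. At 120 FPS a new frame arrives every 1000 / 120 ≈ 8.3 ms, so a per-frame latency near the 10 ms bound can only keep up if consecutive frames overlap in the pipeline, for example with capture, preprocessing, and inference running concurrently or with frames batched together. Little's law (average items in the system = arrival rate × time each item spends in it) gives the minimum overlap. The helpers below are an illustrative calculation on the stated figures, not measurements from the project.

```python
import math


def frame_budget_ms(fps: float) -> float:
    """Time between consecutive frames at a given frame rate, in milliseconds."""
    return 1000.0 / fps


def min_frames_in_flight(fps: float, latency_ms: float) -> int:
    """Little's law: frames concurrently in the pipeline = arrival rate x per-frame latency."""
    return max(1, math.ceil(fps * latency_ms / 1000.0))


if __name__ == "__main__":
    print(round(frame_budget_ms(120), 2))   # 8.33
    print(min_frames_in_flight(120, 10.0))  # 2 (latency exceeds the frame budget)
    print(min_frames_in_flight(120, 8.0))   # 1 (fits within the frame budget)
```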

Key Takeaways

- ONNX Runtime Is Well Suited to Edge AI – Delivered cross-platform performance with minimal overhead.

- Protocol-Based Model Management is Critical – MCP ensured consistency, traceability, and scalability.

- Quantization is Essential for Edge Deployment – 8-bit models ran 3x faster with negligible accuracy loss.

- Real-Time Processing Requires Hardware Optimization – GPU-accelerated preprocessing and dynamic batching maximized throughput.

- Future-Proofing with Interoperability – ONNX and MCP made the system adaptable to new hardware and AI advancements.

This project demonstrated how protocol-managed edge AI can improve industrial automation by combining high-performance computer vision, efficient model deployment, and scalable infrastructure management.
