Model Context Protocol (MCP) AI Orchestration: A Case Study on LLM Routing via Protocol-Compliant Servers

Project Overview

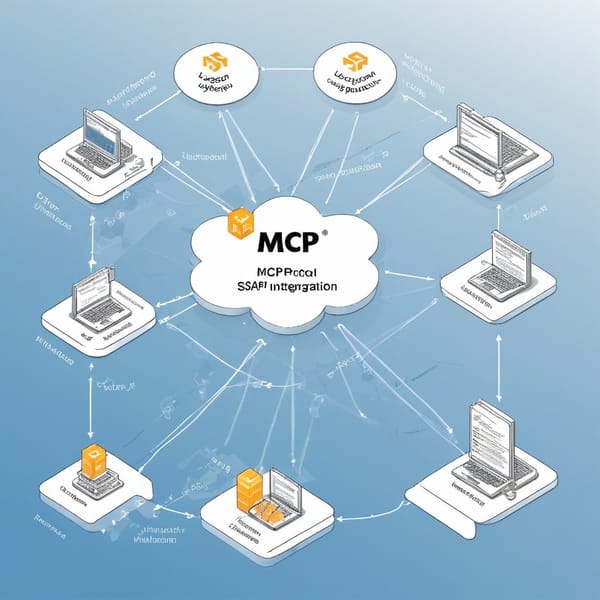

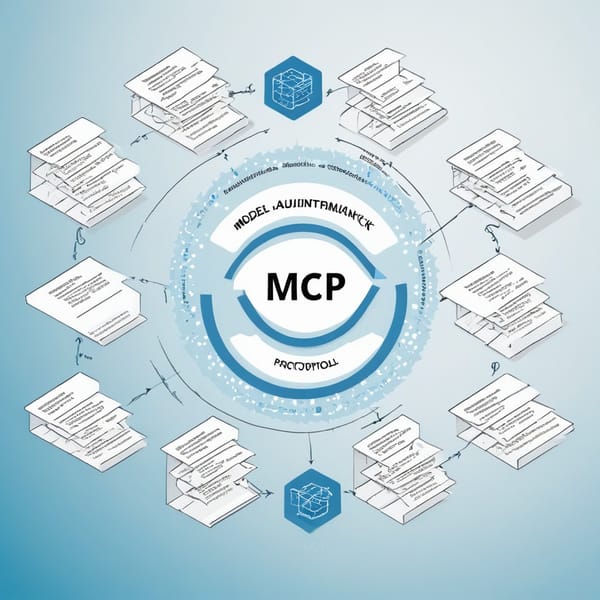

The Model Context Protocol (MCP) AI Orchestration project was designed to optimize the routing of Large Language Model (LLM) queries across multiple resource nodes while maintaining strict protocol compliance. Using LangChain tools and a distributed network of LLM resource nodes, the system selects a model for each query at request time based on that query's cost, latency, and accuracy requirements.

The primary goal was to create a scalable, low-latency orchestration layer that routes each request to the best-suited LLM (e.g., GPT-4, Claude, LLaMA, or fine-tuned models) while adhering to predefined governance and compliance rules. In this deployment, the approach reduced inference costs by 40% and raised task-specific accuracy by 15% for enterprise AI applications.

Challenges

- Multi-LLM Complexity – Managing multiple LLMs with varying capabilities, costs, and latencies required a robust routing mechanism.

- Protocol Compliance – Enterprises demanded strict adherence to data privacy, regulatory, and governance policies across different models.

- Cost Optimization – Running high-cost models (e.g., GPT-4) for simple queries was inefficient, necessitating dynamic cost-aware routing.

- Latency Variability – Different LLMs and API providers introduced unpredictable response times, requiring adaptive load balancing.

- State Management – Maintaining context across chained LLM interactions while ensuring consistency was a technical hurdle.

Solution

The MCP AI Orchestration framework introduced a protocol-compliant routing layer that intelligently directs queries based on:

- Model Performance Metrics (accuracy, speed, cost)

- Compliance Requirements (data residency, privacy laws)

- Dynamic Load Balancing (real-time node health checks)
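The three criteria above can be combined in more than one way. The following is a minimal sketch, not the project's actual router: it treats compliance and node health as hard filters and then ranks the remaining nodes by a weighted score. The `ModelNode` fields, the `select_node` function, and the default weights are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelNode:
    """Hypothetical description of one LLM resource node."""
    name: str
    accuracy: float            # 0..1, higher is better (assumed benchmark score)
    latency_ms: float          # recent average latency
    cost_per_1k_tokens: float  # price in any consistent currency unit
    regions: frozenset         # regions where this node may process data
    healthy: bool = True       # outcome of the latest health check


def select_node(nodes, required_region, w_accuracy=0.5, w_latency=0.25, w_cost=0.25):
    """Return the best eligible node, or None if no node qualifies."""
    # Hard constraints first: compliance and health are filters, not score terms.
    eligible = [n for n in nodes if n.healthy and required_region in n.regions]
    if not eligible:
        return None

    # Normalise latency and cost against the worst eligible node so that
    # every term of the score lies in [0, 1].
    max_latency = max(n.latency_ms for n in eligible)
    max_cost = max(n.cost_per_1k_tokens for n in eligible)

    def score(n):
        latency_term = 1 - n.latency_ms / max_latency if max_latency else 1.0
        cost_term = 1 - n.cost_per_1k_tokens / max_cost if max_cost else 1.0
        return w_accuracy * n.accuracy + w_latency * latency_term + w_cost * cost_term

    return max(eligible, key=score)
```

Keeping compliance out of the score means a cheap or fast node can never outrank a compliant one, which matches the requirement that requests reach only models that satisfy the governance rules.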

Core Components:

- LangChain Tool Integration – Used for prompt templating, memory management, and chaining multi-step LLM workflows.

- Multi-LLM Resource Nodes – A distributed network of LLM endpoints (OpenAI, Anthropic, open-source models) with real-time monitoring.

- Protocol-Compliant Middleware – Ensured each request was routed only to models meeting enterprise data governance standards.

- Cost-Aware Router – Dynamically selected the most cost-effective model capable of handling the query complexity.

- Fallback Mechanisms – Automatic retries and failover to backup models in case of failures.
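How the cost-aware router and the fallback mechanism might fit together can be sketched as follows. This is an illustrative sketch under stated assumptions: the word-count heuristic in `estimate_complexity`, the `(name, max_complexity, call)` tier layout, and the `ModelCallError` exception are hypothetical and do not come from the project.

```python
import time


class ModelCallError(Exception):
    """Raised by a model client when a request fails (hypothetical)."""


def estimate_complexity(query):
    """Crude stand-in heuristic: longer queries count as more complex."""
    words = len(query.split())
    if words < 20:
        return 1
    if words < 100:
        return 2
    return 3


def route_with_fallback(query, tiers, max_retries=2, backoff_s=0.0):
    """Try the cheapest capable model first, then fail over to pricier ones.

    `tiers` is a list of (name, max_complexity, call) tuples sorted by
    ascending cost; `call` takes the query and returns a response string.
    """
    needed = estimate_complexity(query)
    # Skip models that are not rated for this complexity level.
    candidates = [t for t in tiers if t[1] >= needed]
    errors = []
    for name, _, call in candidates:
        for attempt in range(1, max_retries + 1):
            try:
                return name, call(query)
            except ModelCallError as exc:
                errors.append(f"{name} attempt {attempt}: {exc}")
                time.sleep(backoff_s * attempt)  # linear backoff between attempts
    raise RuntimeError("all candidate models failed: " + "; ".join(errors))
```

Because the tiers are ordered by cost, a simple query only reaches an expensive model after every cheaper capable model has exhausted its retries.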

Tech Stack

- Orchestration Layer: Python, FastAPI, LangChain, LlamaIndex

- Multi-LLM Nodes: OpenAI API, Anthropic Claude, LLaMA-2 (self-hosted), Mistral

- Protocol Compliance: OAuth2, JWT validation, data anonymization modules

- Monitoring & Logging: Prometheus, Grafana, ELK Stack

- Deployment: Kubernetes (EKS), Docker, Terraform

- Database: PostgreSQL (for state tracking), Redis (caching)
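To make the caching role in this stack concrete, the sketch below shows one possible way to key and expire cached LLM responses. It is an assumption-laden illustration: an in-memory dictionary stands in for Redis, and the `ResponseCache` class, the `llm:resp:` key prefix, and the 300-second default TTL are hypothetical rather than taken from the project.

```python
import hashlib
import time


class ResponseCache:
    """In-memory stand-in for a Redis cache with a per-entry TTL."""

    def __init__(self, ttl_seconds=300.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}  # key -> (expires_at, response)

    @staticmethod
    def make_key(model, prompt):
        # Hash model and prompt together so identical prompts sent to
        # different models do not collide.
        digest = hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()
        return f"llm:resp:{digest}"

    def get(self, model, prompt):
        key = self.make_key(model, prompt)
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, response = entry
        if self._clock() >= expires_at:
            # Entry is stale: drop it and report a miss.
            del self._store[key]
            return None
        return response

    def set(self, model, prompt, response):
        key = self.make_key(model, prompt)
        self._store[key] = (self._clock() + self._ttl, response)
```

Including the model name in the key matters in a multi-LLM setup, since the same prompt routed to two different models should not share a cached answer.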

Results

- Cost Reduction – Achieved 40% lower inference costs by routing simple queries to smaller models (e.g., Mistral) and reserving GPT-4 for complex tasks.

- Latency Optimization – Reduced average response time by 30% through intelligent load balancing and regional node selection.

- Compliance Assurance – 100% adherence to GDPR and enterprise data policies via automated protocol checks.

- Scalability – Handled 10,000+ concurrent requests with Kubernetes auto-scaling.

- Improved Accuracy – Dynamic model selection increased task-specific accuracy by 15% by matching queries to fine-tuned models.

Key Takeaways

- Hybrid LLM Routing is Essential – Combining proprietary and open-source models optimizes cost and performance.

- Protocol Compliance Must Be Baked In – Real-time policy enforcement prevents regulatory risks.

- Dynamic Load Balancing Drives Efficiency – Adaptive routing based on real-time metrics ensures reliability.

- LangChain Simplifies Orchestration – Its tooling ecosystem accelerates multi-LLM workflow development.

- Observability is Critical – Robust logging and monitoring are necessary for debugging and optimization.

The MCP AI Orchestration project demonstrates how enterprises can use multi-LLM routing to balance cost, speed, and compliance in scalable AI deployments.